Mage-AI Exploration: Data Pipeline Development on Your Laptop

What is Mage-AI?

Mage-AI is a modern data pipeline tool that combines the interactive nature of notebooks with production-ready modularity. Think of it as a bridge between data exploration and production deployment, designed to make data workflows accessible to both technical and non-technical users.

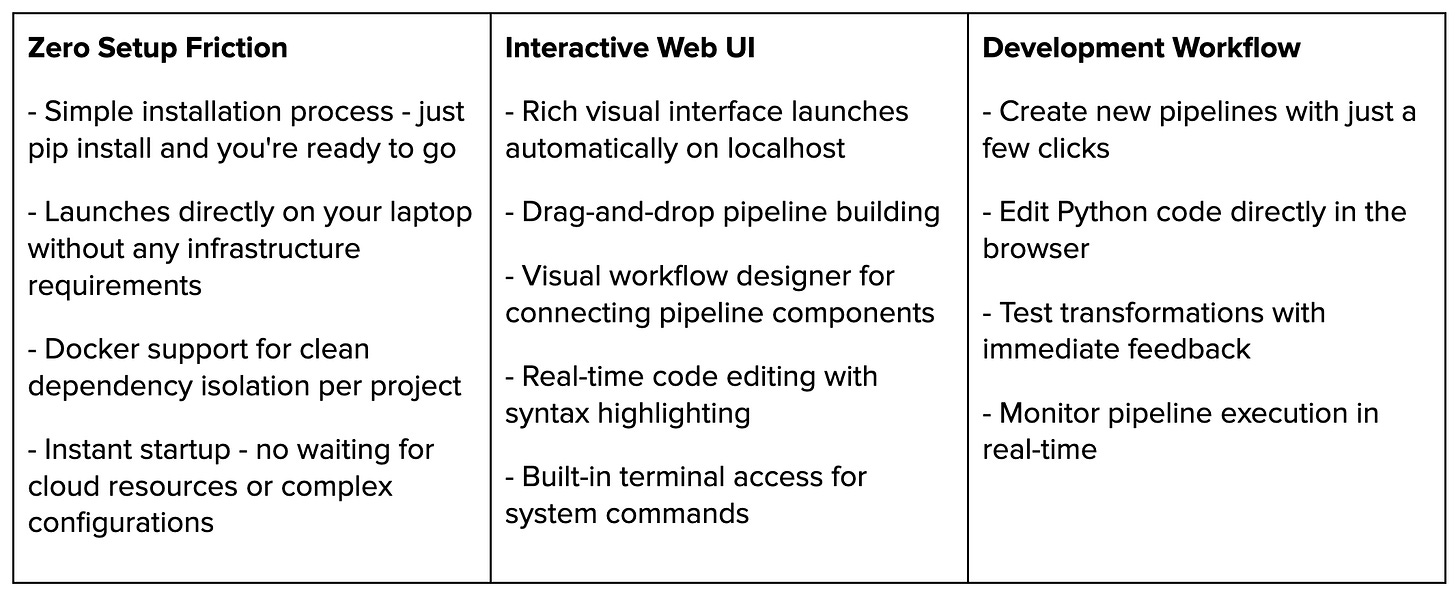

Key features that make it stand out:

Visual pipeline builder

Rich connector ecosystem

Local-first development approach

Production-ready code structure

Local Development Experience

The local development experience with Mage-AI is remarkably straightforward and powerful. While the overall experience is smooth, my exploration also turned up a few areas with room for future enhancement:

No direct UI for package management: I couldn't find a UI option for running pip install or similar dependency commands

Terminal functionality needs further testing to understand its full capabilities

Package management workflow could be more integrated into the UI experience

Connector Ecosystem

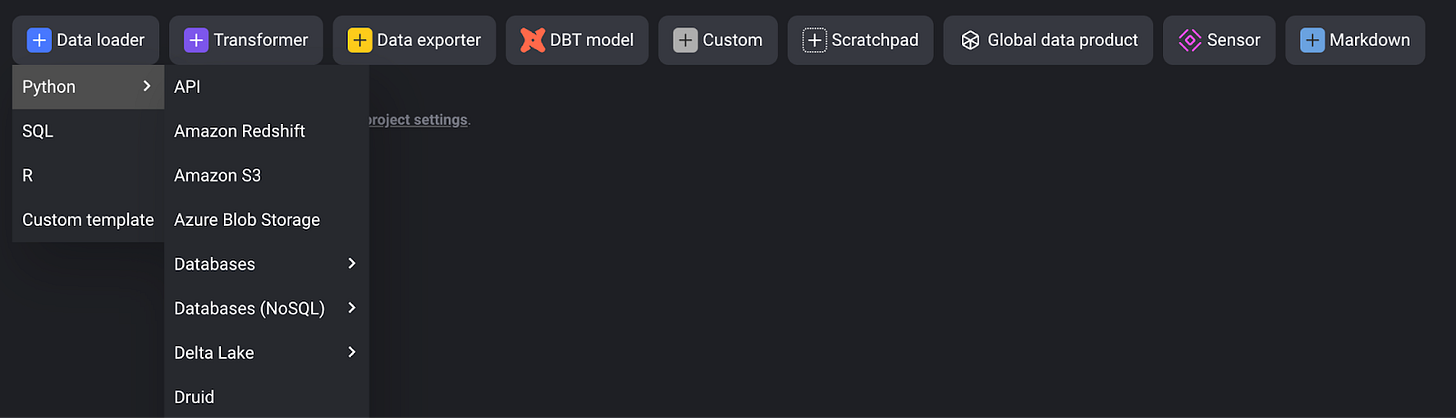

Mage-AI's connector ecosystem is built around three main components: Data Loaders, Transformers, and Exporters. Let me break down what I discovered during my exploration:

Data Loaders & Exporters

These components share a rich set of connectors supporting:

Databases: MySQL, PostgreSQL, MongoDB

Cloud Storage: AWS S3, Google Cloud Storage, Azure Blob Storage

Data Warehouses: Snowflake, Redshift

Lakehouses: Delta Lake

SaaS Platforms: Google Sheets, Google Drive

What makes these connectors powerful is their flexibility:

Support for Python, SQL, and R

Custom template creation capability

Direct SQL query execution on databases

Integration with query engines (Trino, Snowflake, Spark)

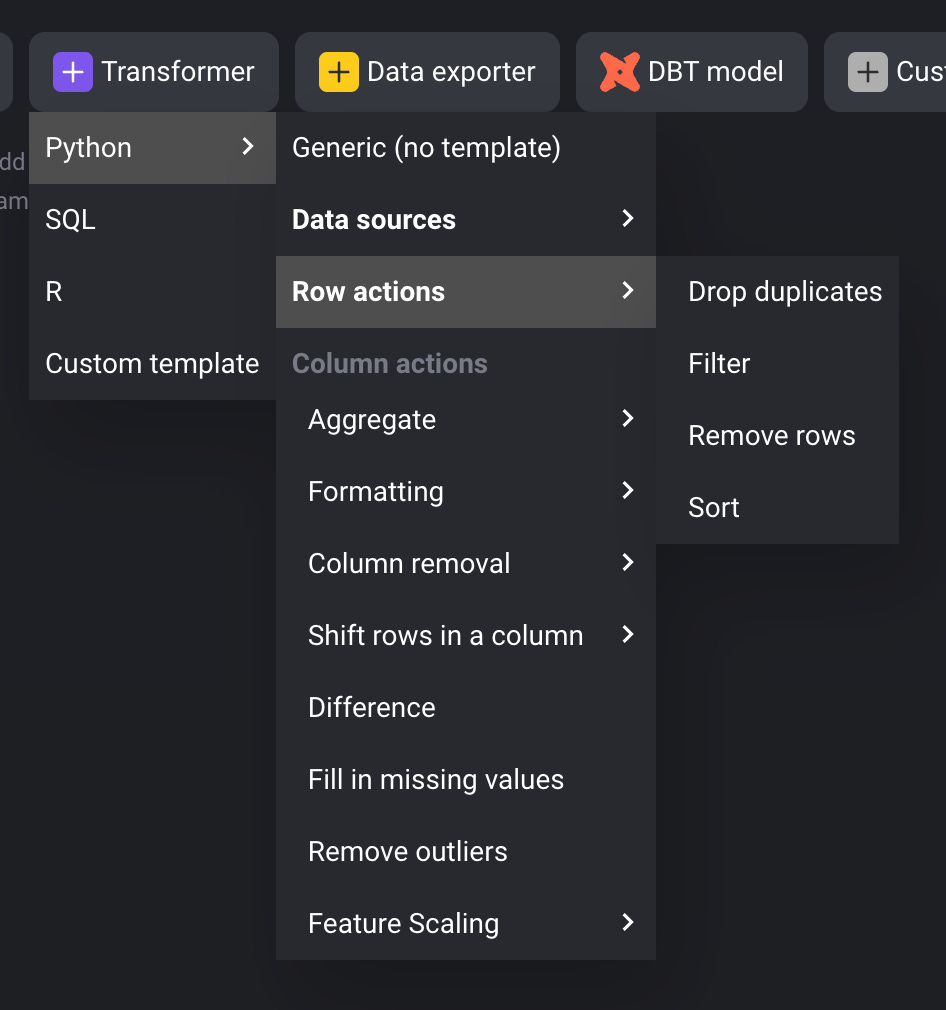

Transformers

The transformation layer offers both built-in and custom options:

Built-in Transformers:

Drop duplicates, filter rows, sort data

Sum, count distinct, group by operations

Fill in missing values, handle nulls

Basic column operations (rename, drop, select)

These pre-built transformers eliminate the need to:

Write repetitive code

Copy-paste common transformations

Maintain similar logic across pipelines
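To make the built-in options more concrete, the sketch below approximates three of them in plain Python over a list of row dictionaries: drop duplicates, fill in missing values, and select columns. This is not the code Mage generates, which works on pandas DataFrames. The function names and sample columns are hypothetical.

```python
from typing import Any, Dict, Iterable, List

Row = Dict[str, Any]


def drop_duplicates(rows: Iterable[Row]) -> List[Row]:
    """Keep the first occurrence of each identical row, preserving order."""
    seen = set()
    result = []
    for row in rows:
        key = tuple(sorted(row.items()))  # hashable fingerprint of the row
        if key not in seen:
            seen.add(key)
            result.append(row)
    return result


def fill_missing(rows: Iterable[Row], column: str, value: Any) -> List[Row]:
    """Replace None in one column with a default, returning new row dicts."""
    return [
        {**row, column: value} if row.get(column) is None else dict(row)
        for row in rows
    ]


def select_columns(rows: Iterable[Row], columns: List[str]) -> List[Row]:
    """Keep only the named columns."""
    return [{c: row.get(c) for c in columns} for row in rows]


rows = [
    {"year": 2022, "region": "north", "orders": None},
    {"year": 2022, "region": "north", "orders": None},
    {"year": 2021, "region": "south", "orders": 95},
]
clean = fill_missing(drop_duplicates(rows), "orders", 0)
print(select_columns(clean, ["year", "orders"]))
# [{'year': 2022, 'orders': 0}, {'year': 2021, 'orders': 95}]
```

Each helper returns new rows rather than mutating its input, so the steps can be chained in any order.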

Beyond the built-in options, you can write custom Python code with the Generic (no template) option to handle any complex transformation logic your pipeline requires.
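As an illustration of logic that goes beyond the built-ins, here is a minimal sketch of a Generic transformer block. It adds a year-over-year change column. Mage's Python templates take the upstream output as `data` and return the transformed result. The `year` and `orders` fields and the `yoy_change` output are hypothetical, and plain row dictionaries stand in for a DataFrame.

```python
if "transformer" not in globals():
    try:
        from mage_ai.data_preparation.decorators import transformer
    except ImportError:
        # Assumption: stand-in so the sketch runs without Mage installed
        def transformer(func):
            return func


@transformer
def add_year_over_year_change(data, *args, **kwargs):
    """Add 'yoy_change': orders minus the previous year's orders.

    Assumes hypothetical 'year' and 'orders' fields with one row per year;
    the change is None for the first year or when either value is missing.
    """
    rows = sorted(data, key=lambda row: row["year"])
    previous = None
    result = []
    for row in rows:
        current = row.get("orders")
        change = None if previous is None or current is None else current - previous
        result.append({**row, "yoy_change": change})
        previous = current
    return result
```

A calculation like this depends on row order and on state carried between rows. That is typically where a custom block earns its place over chaining built-in actions.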

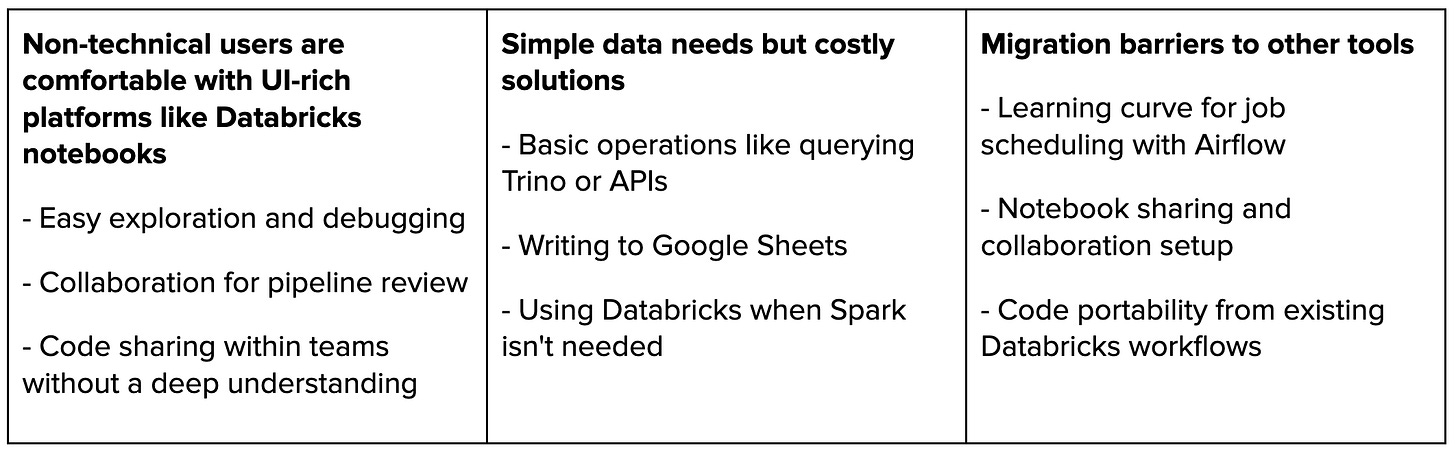

Real-world Use Cases

One of the most practical applications I've seen is from operational teams who rely heavily on Google Sheets for their daily work. Here's how Mage-AI transforms their workflow:

The Challenge

Many operational teams are locked into enterprise platforms that are powerful but overkill for their straightforward data pipeline needs.

The Solution with Mage-AI

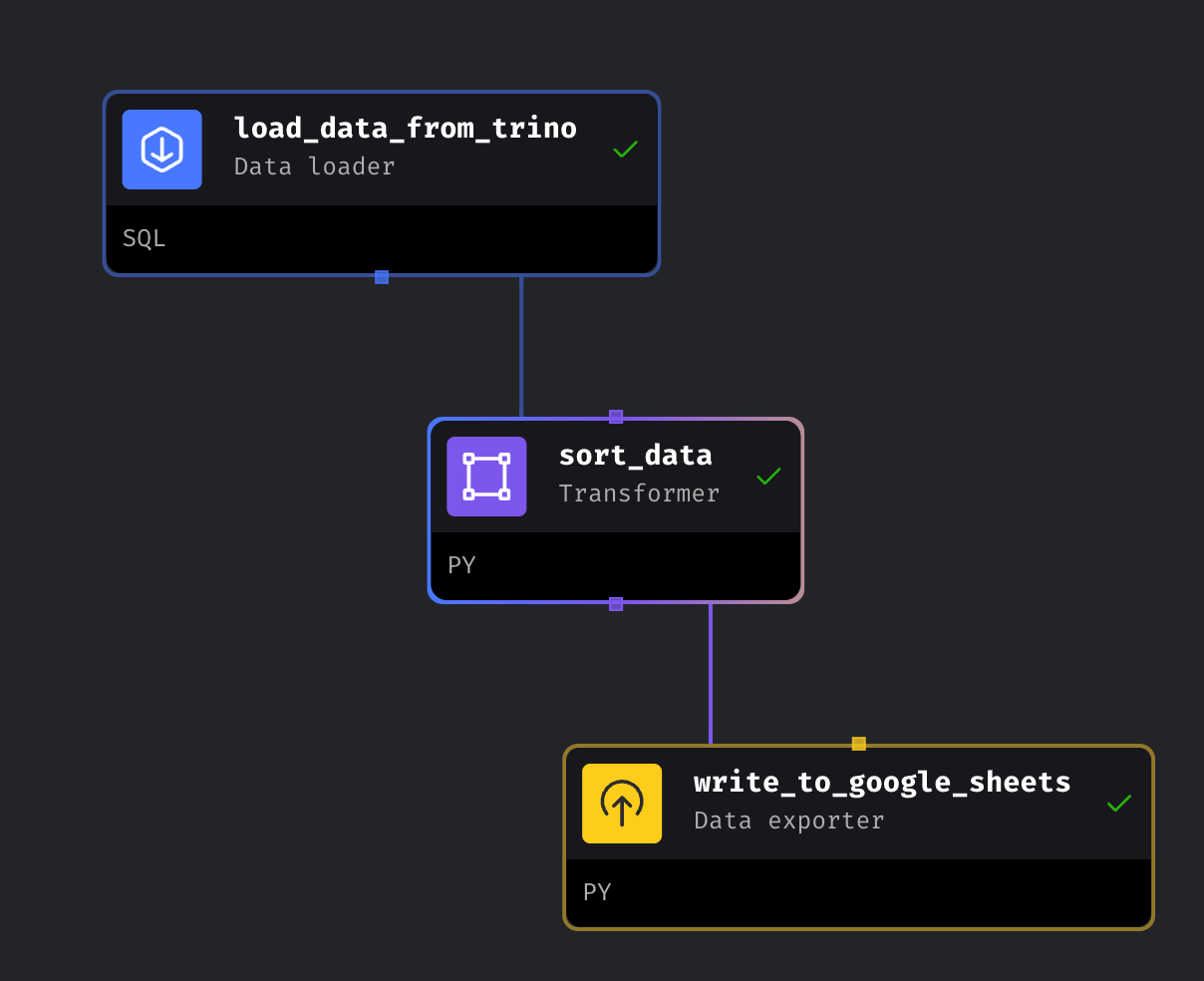

Let me walk you through a simple three-step pipeline I built that demonstrates how easy it is to replace a Databricks workflow:

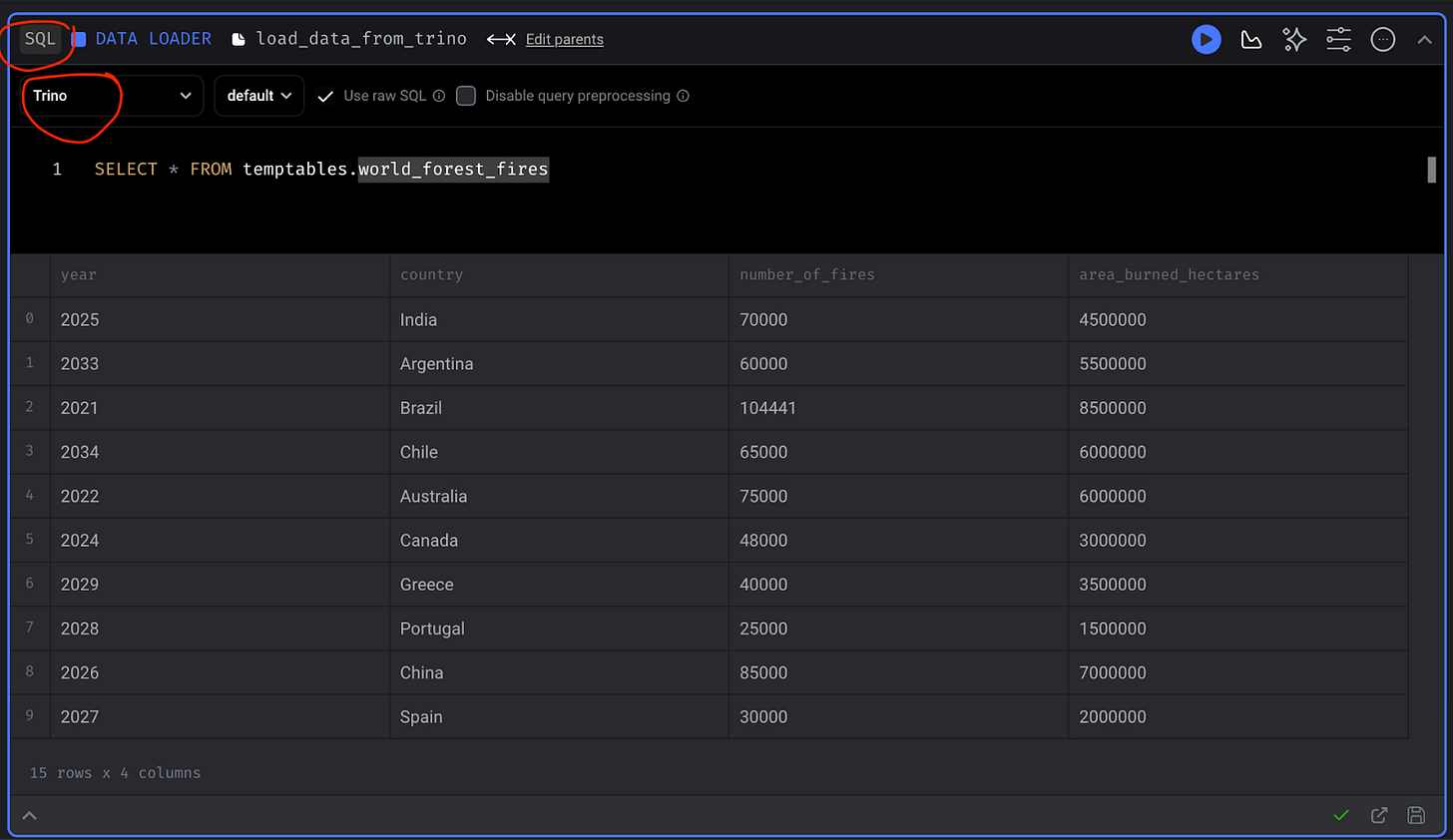

Data Loader: Load Data from Trino

Mage-AI provides a SQL template for loading data from Trino:

Select "SQL" data loader from templates

Choose Trino as the connection type

Connection details are pulled from the global config file (io_config.yaml)

Write your SQL query in the editor

Test query and preview results directly in UI

Data loads into pipeline memory for the next steps
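Under the hood, this step runs a query over a database connection and collects the rows. The sketch below shows that step in plain Python using the DB-API 2.0 cursor interface (PEP 249). It is not Mage's implementation. The `trino` client package provides a DB-API-compatible `trino.dbapi.connect(...)`, but no Trino server is assumed here. The runnable example therefore swaps in Python's built-in `sqlite3`, which follows the same interface. The table, columns, and connection values are hypothetical.

```python
import sqlite3
from typing import Any, Callable, Dict, List


def load_rows(connect: Callable[[], Any], query: str) -> List[Dict[str, Any]]:
    """Run a query through any DB-API 2.0 connection and return rows as dicts."""
    conn = connect()
    try:
        cur = conn.cursor()
        cur.execute(query)
        # cursor.description has one entry per column; item 0 is the column name
        columns = [col[0] for col in cur.description]
        return [dict(zip(columns, row)) for row in cur.fetchall()]
    finally:
        conn.close()


# Against a real Trino server you might pass something like (hypothetical values):
#   from trino.dbapi import connect
#   lambda: connect(host="trino.example.internal", port=8080, user="analyst",
#                   catalog="hive", schema="sales")


def demo_connect() -> sqlite3.Connection:
    """In-memory SQLite stand-in for Trino, seeded with sample rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (year INTEGER, region TEXT, total INTEGER)")
    conn.executemany(
        "INSERT INTO orders VALUES (?, ?, ?)",
        [(2023, "north", 120), (2021, "south", 95), (2022, "north", 150)],
    )
    return conn


rows = load_rows(demo_connect, "SELECT year, total FROM orders WHERE region = 'north'")
print(rows)
```

Passing a connection factory instead of a live connection keeps the loader independent of any particular engine. It is also what lets the same function run against SQLite here.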

Transformer: Sort

In this example, I leveraged Mage-AI's built-in sort transformer to order the dataset by year in ascending order. Whether you need simple transformations like sorting or complex data manipulations, Mage-AI offers built-in transformers and the flexibility to write custom Python code.
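The sort step here orders rows by year, ascending. The function below is a rough plain-Python equivalent of that step, not the code Mage's built-in sort template generates. It sorts row dictionaries by one column. As an assumption of this sketch, rows with a missing value go last, mirroring pandas' default `na_position='last'` in `sort_values`.

```python
from typing import Any, Dict, List


def sort_rows(rows: List[Dict[str, Any]], column: str, ascending: bool = True) -> List[Dict[str, Any]]:
    """Return rows ordered by `column`; rows where it is None go last."""
    present = [r for r in rows if r.get(column) is not None]
    missing = [r for r in rows if r.get(column) is None]
    ordered = sorted(present, key=lambda r: r[column], reverse=not ascending)
    return ordered + missing


rows = [{"year": 2023}, {"year": None}, {"year": 2021}, {"year": 2022}]
print([r["year"] for r in sort_rows(rows, "year")])
# [2021, 2022, 2023, None]
```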

Data Exporter: Write To Google Sheets

Mage-AI makes exporting to Google Sheets straightforward:

Select "Google Sheets" from exporter templates

Template code is auto-generated for you

Configure the sheet_id

Set up the Google connection using service account credentials

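```python
from os import path

from mage_ai.io.config import ConfigFileLoader
from mage_ai.io.google_sheets import GoogleSheets
# Recent Mage versions; older releases import this from mage_ai.data_preparation.repo_manager
from mage_ai.settings.repo import get_repo_path
from pandas import DataFrame

# Mage injects the decorator when the block runs; import it only when it is missing
if 'data_exporter' not in globals():
    from mage_ai.data_preparation.decorators import data_exporter


@data_exporter
def export_to_google_sheet(df: DataFrame, **kwargs) -> None:
    """
    Template for exporting data to a worksheet in a Google Sheet.
    Specify your configuration settings in 'io_config.yaml'.

    Sheet Name or ID may also be used instead of URL
    sheet_id = "your_sheet_id"
    sheet_name = "your_sheet_name"

    Worksheet position or name may also be specified
    worksheet_position = 0
    worksheet_name = "your_worksheet_name"

    Docs: [TODO]
    """
    # Service account credentials come from the 'default' profile of io_config.yaml
    config_path = path.join(get_repo_path(), 'io_config.yaml')
    config_profile = 'default'

    # The ID is the long token in the sheet URL: .../spreadsheets/d/<ID>/edit
    sheet_id = '<FILL IN YOUR GOOGLE SHEET ID HERE>'

    GoogleSheets.with_config(ConfigFileLoader(config_path, config_profile)).export(
        df,
        sheet_id=sheet_id,
    )
```

Set up your Google connection by creating a service account, enabling the Google Sheets API in your Google Cloud project, and sharing the target sheet with the service account email (Editor permission).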

Add the service account credentials to the Mage-AI config (io_config.yaml):

```yaml
version: 0.1.1
default:
  GOOGLE_SERVICE_ACC_KEY:
    type: service_account
    project_id: "{{ mage_secret_var('google_sheets_project_id') }}"
    private_key_id: "{{ mage_secret_var('google_sheets_private_key_id') }}"
    private_key: "{{ mage_secret_var('google_sheets_private_key') }}"
    client_email: "{{ mage_secret_var('google_sheets_client_email') }}"
    client_id: "{{ mage_secret_var('google_sheets_client_id') }}"
    auth_uri: "https://accounts.google.com/o/oauth2/auth"
    token_uri: "https://oauth2.googleapis.com/token"
    auth_provider_x509_cert_url: "https://www.googleapis.com/oauth2/v1/certs"
    client_x509_cert_url: "{{ mage_secret_var('google_sheets_client_x509_cert_url') }}"
    universe_domain: googleapis.com
```

Pro tip: Use Mage-AI's secret manager to securely store and manage your service account credentials rather than hardcoding them in your pipeline.
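To populate those secrets, you can read the JSON key file Google provides for the service account. Then collect the value for each secret name referenced in the io_config.yaml above. The sketch below only builds that name-to-value mapping. How you enter the values into Mage's secret manager is outside its scope. The `google_sheets_` prefix matches the config above. The helper name and the `service_account.json` file name are hypothetical.

```python
import json
from typing import Dict

# Key-file fields that the io_config.yaml above reads through mage_secret_var(...)
SECRET_FIELDS = [
    "project_id",
    "private_key_id",
    "private_key",
    "client_email",
    "client_id",
    "client_x509_cert_url",
]


def secrets_from_key(key_json: str, prefix: str = "google_sheets_") -> Dict[str, str]:
    """Map each secret name used in io_config.yaml to its value from the key file."""
    key = json.loads(key_json)
    missing = [field for field in SECRET_FIELDS if field not in key]
    if missing:
        raise ValueError(f"Service account key is missing: {', '.join(missing)}")
    return {prefix + field: key[field] for field in SECRET_FIELDS}


# Usage (hypothetical file name; keep the real key file out of version control):
#   with open("service_account.json", encoding="utf-8") as fh:
#       for name in secrets_from_key(fh.read()):
#           print(name)  # print names only, never the secret values
```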

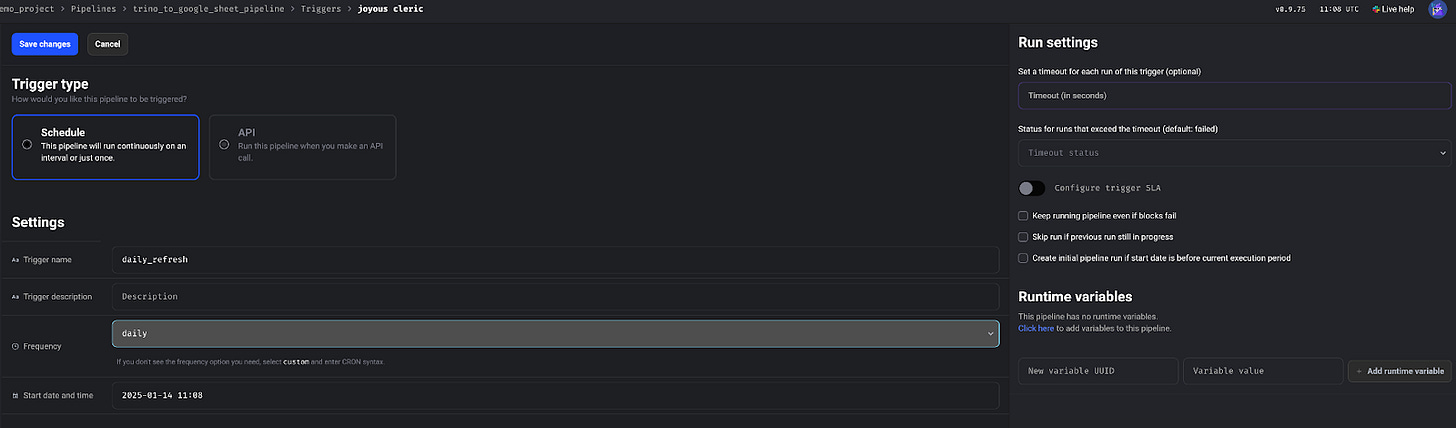

Scheduling Your Pipeline

Scheduling is easy in Mage-AI:

Click the Trigger icon in the left side menu

Create new trigger

Set your schedule preferences in the form (as in the screenshot above)

That's it! Your pipeline will now run automatically on schedule, as long as the Mage server is running.
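A fixed-interval schedule is essentially a start time plus a repeat interval. The sketch below computes the upcoming run times for such a schedule. It can help you sanity-check the start time you enter in the trigger form. It is an illustration only, not Mage's scheduler. The preset names are hypothetical, and it ignores cron expressions, monthly intervals, and time zones.

```python
from datetime import datetime, timedelta
from typing import List

# Hypothetical preset names mapped to fixed intervals
INTERVALS = {
    "hourly": timedelta(hours=1),
    "daily": timedelta(days=1),
    "weekly": timedelta(weeks=1),
}


def next_runs(start: datetime, frequency: str, after: datetime, count: int = 3) -> List[datetime]:
    """Return the next `count` run times strictly after `after`."""
    step = INTERVALS[frequency]
    if after < start:
        first = start
    else:
        # Whole intervals elapsed since start, then one step past `after`
        elapsed = (after - start) // step
        first = start + (elapsed + 1) * step
    return [first + i * step for i in range(count)]


start = datetime(2024, 1, 1, 6, 0)
print(next_runs(start, "daily", after=datetime(2024, 3, 10, 9, 30), count=2))
# [datetime.datetime(2024, 3, 11, 6, 0), datetime.datetime(2024, 3, 12, 6, 0)]
```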

Limitations and Considerations

Team Collaboration:

Local development raises questions about code sharing

Need a strategy for pipeline version control

Team members need a way to review and contribute

Production Deployment:

The local laptop isn't suitable for scheduled jobs

Need a remote server for reliable scheduling

Questions around:

Pipeline deployment process

Server setup and maintenance

Access control and security

Monitoring and alerts

These limitations highlight the need for a clear path from local development to production deployment, especially for teams moving beyond individual use cases.

Tip: Mage-AI offers a Pro cloud version that addresses these enterprise needs with features for team collaboration, deployment management, and production-grade scheduling.

Future Exploration

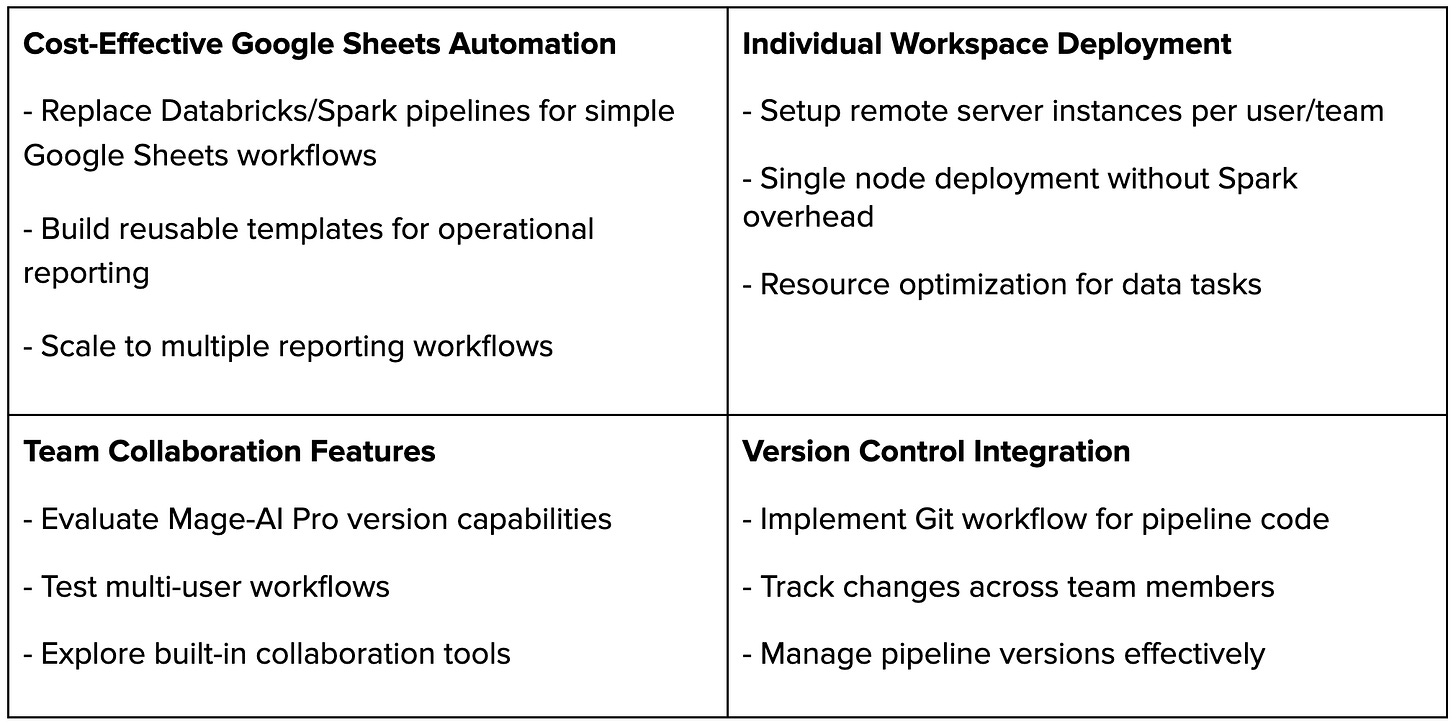

The image above outlines key areas worth exploring with Mage-AI.

These exploration areas focus on practical ways to maximize Mage-AI's value while addressing real team needs.

Conclusion

Mage-AI is a powerful tool for simplifying data pipeline development, especially for operational teams working with Google Sheets. Its local-first approach, intuitive UI, and rich connector ecosystem make it an excellent alternative to heavyweight solutions like Databricks for straightforward data workflows.

This exploration shows how a simple three-step pipeline can replace complex notebook-based solutions, making data automation accessible to non-technical users. The ability to run everything on a laptop while maintaining professional-grade features opens new possibilities for teams looking to streamline their data operations.

While there are considerations around production deployment and team collaboration, Mage-AI's path forward with Pro features and active development makes it a promising tool worth exploring for your data workflow needs.

Ready to try it yourself? Check out the complete code and examples from this exploration at this GitHub repo.